So, you are the IT department in charge of your home office backups. Congratulations, and let’s go to work.

A few caveats, aka: »management of expectations«

When your systems have been compromised, never let any of them access your existing backups. Make sure, beyond doubt, that you are accessing them from uncompromised systems only. If you don’t know how to first restore your infrastructure to an uncompromised state, contact an IT professional who can, before you even think of plugging in your backups, anywhere.

Boot into Linux

Boot into a fairly recent Linux distribution. For this server-centric episode, we’ve used Ubuntu 20.04 LTS, and we would advise you to not base your setup on a rolling release Linux distribution.

Open a terminal and you’re ready to go.

Tools used, and their packages

| Tool | Package to install |
|---|---|
| btrfs | btrfs-progs |
| btrbk | We suggest you always install the most recent version, as described in its README |
| csvkit | csvkit |
| cryptsetup | cryptsetup |
| iconv | libtext-iconv-perl |
| OpenSSH | openssh-server |
| siegfried | We suggest following the installation instructions on the homepage |

What to fear, and what to observe

»People don’t want backups, they want restore«

»No backup, no sympathy«

Risks for, and threats to your data

Let’s quickly summarize the most common bad things that can happen to your data.

- Deletion – You erroneously delete what you will never ever need again. Until two weeks after, that is – but then it’s gone. Or: a malevolent person starts to delete data, and it goes unnoticed for a while.

- Physical damage – Hardware fails; you spill water over your device; a natural disaster or lightning strikes. A malevolent person deliberately damages your infrastructure.

- Loss – Burglary; theft; confiscation; or you simply forget a device somewhere and it’s gone. Your data becomes unavailable to you – but maybe available to others.

- Deterioration – Media or media state degrades (bit rot).

- Corruption – Backup data gets written while it’s being modified, and the consistency of data items, or of their relationships, goes down the bin; backups are partially overwritten or deleted, e.g. due to misconfigured backups, buggy software, or misguided maintenance attempts. A malevolent person actively manipulates your data. Software bugs cause corrupted live data states, which then slowly spread through your backup time-traveling states.

- Lockout – You forget a password; your one-and-only hardware encryption key dies; new versions of your software (for backup, or for value creation) can’t open old backup or file versions; ransomware encrypts your data. A malevolent person steals the only security token holding the encryption key, or deletes all key copies from all of your media. Natural disasters prevent your access to offsite backups.

- You – Temporarily or permanently, you become incapable of gaining or giving access to your backups (e.g., traveling; injured; being administered heavy medication; killed; imprisoned; kidnapped). Without proper precautions, you become a single point of failure for your system, leaving even the persons you had authorized without any data access options.

Reasonable backup principles

Over time, a few backup principles have proven to be invaluable guidelines for mitigating or even eliminating the risks and threats we just mentioned:

- Consistency – All data is backed up in a consistent state, both individually (no ongoing changes of an item while it is being backed up) as well as with respect to implied or express relationships (no invalid relationships between data items, after a restore).

- Redundancy – There are at least two backups.

- Time travel – Your backup concept supports a sufficient amount of time-traveling capabilities, by offering several snapshots of past states.

- Separation – Your backups do not share any physical medium with your live data.

- Air gapped – At least one backup is »air gapped« (unplugged), by default.

- Offsite copy – At least one of your backups is kept offsite, and there is absolutely no point in time when all backups and the media hosting the live data are in the same place.

- Periodical checks – Your data and your backups are periodically checked for integrity, and for actual recoverability. Preferably while mounted on a different system than the one used to create and modify the data.

- Authorized electronic access – Your live data and backups are guarded against unauthorized electronic access, via encryption and access control. There is somebody you have authorized and enabled to access them, on behalf of you.

- Authorized physical access – Your live data and backups are guarded against unauthorized physical access, via physical access control. There is somebody you have authorized and enabled to access them, on behalf of you.

- 24⁄7 availability – Your backups are accessible 24⁄7, for authorized persons. There is somebody you have authorized and enabled to access them in a timely fashion.

- Automation – Your backups are automated. You can only restore backups that were actually made – no matter how stressed, distracted, lazy or unavailable you were.

- Value creation rules – Business value gets created incrementally. The acceptable amount of lost work dictates the minimal backup frequency, the granularity and speed that is desirable when restoring backups, and the availability of restore options to authorized persons.

- The more trusted system takes control – A more trusted backup system (e.g., a server dedicated to backing up data, only) will pull the data from less trusted systems. A less trusted backup system (e.g., the cloud) will have the data pushed to it, by a more trusted system. BTW: you never fully trust the more trusted system, either.

If you’re wondering how Peter Krogh’s venerable 3−2−1 rule fits into the picture: we challenge you to find the canonical interpretation of it, the one everybody agrees with. We think a list of individually targeted principles is clearer and more helpful. And we think that Redundancy, Separation and Offsite copy combined make a very good equivalent for the 3−2−1 rule.

Common backup options, and how to improve them, today

Let’s review a few popular backup systems that may look familiar to you, and what you can do to improve on them, given what we just discussed.

Direct Attached Storage (DAS)

Essentially, Direct Attached Storage (DAS) is storage space that you plug into a computer. We’ll consider DAS in its most basic form: a single, external USB drive. In principle, what we say here applies to multi-bay, external drive enclosures as well.

Backups to an external USB drive, drag-and-drop style, check off but a few of the boxes. The rest is left to personal discipline, by default:

Consistency

Redundancy

Time travel

Separation

Air gapped

Offsite copy

Periodical checks

Authorized electronic access

Authorized physical access

24⁄7 availability

Automation

Value creation rules

The more trusted system takes control

An external USB drive is a good start, and certainly cheaper than a backup server. Here are a few precautions to take, and improvements to make, today:

- Air gapped – Only plug in the drive for backups and periodical checks.

- Authorized physical access – If you cannot prevent unauthorized access to your office space, check off the box by locking the drive away, immediately after each use.

- Time travel, Authorized electronic access, Automation and Value creation rules – Set up a software solution with a backup schedule that matches your value creation intervals. BorgBackup is a good starting point (potentially using the Vorta GUI, for a more intuitive start).

- Offsite copy and Redundancy – Get a second external drive, and swap them after each backup. Always move the most recent backup offsite, away from your office. Never keep all backup media and the live data in the same location, but make sure authorized persons have 24⁄7 access to the drives, too.

By implementing these recommendations, you can arrive at:

Consistency

Redundancy

Time travel

Separation

Air gapped

Offsite copy

Periodical checks

Authorized electronic access

Authorized physical access

24⁄7 availability

Automation

Value creation rules

The more trusted system takes control

The NAS / fileserver regression

Simply adding a NAS appliance (or a fileserver) to your network, e.g. to »replace« an external USB drive, does not give you backups. Rather, this setup just replaces your live data storage medium with a networked alternative that is more accessible, by more people from more devices.

A dedicated setup like this usually combines several storage devices into a RAID array with a RAID level greater than 0, hence you’ll get a bit of redundancy and protection against single disk failures – on this very NAS, or server appliance, only.

But backups only exist if they are created on separate media, so what you get at an initial level, with only minimal configuration, is likely:

Consistency

Redundancy

Time travel

Separation

Air gapped

Offsite copy

Periodical checks

Authorized electronic access

Authorized physical access

24⁄7 availability

Automation

Value creation rules

The more trusted system takes control

If you choose to amend the situation by keeping your »most important« data, and your »work in progress« on your desktop computer or notebook, and to »synchronize« that to the NAS, you have gained a bit of redundancy only, but your backup concept enters a slightly foggy state, with some guesswork about where the current version is, per data item.

Here are a few precautions to take, and potential improvements to make:

- Authorized electronic access – Enable encryption, and configure access control beyond the point of just letting every user account access everything.

- Time travel – Most NAS appliances support periodic snapshots, based on native snapshot capabilities of their internal file system (e.g. btrfs or ZFS). Also, since snapshots can be taken in virtually no time, this reduces Consistency issues. Still, this is not a backup yet, and Time travel is only locally available.

- The more trusted system takes control, Time travel, Separation and Offsite copy – If supported by its internal file system, your NAS or file server may allow for very fast remote replication of snapshots to another, remote NAS of the same brand, or to another remote server (with backup media formatted to the same file system). This would be a push, i.e. the system hosting your live data would have access to the backup NAS.

However, we strongly discourage you from setting up such push replication tasks on your live data system. Go for a pull, from elsewhere. For our reasons why, and ways how to, see below under Why your live data system shouldn’t do backups, and who should.

- The more trusted system takes control, Offsite copy – If sufficient cloud storage space is available, and your live data system supports replication to it, you may be tempted to set up a push task for that. Have a look at the section on Data in the cloud below, for some caveats. Still, we strongly discourage you from a push, controlled by your live data system.

If you follow all of these recommendations, you can arrive at:

Consistency

Redundancy

Time travel

Separation

Air gapped

Offsite copy

Periodical checks

Authorized electronic access

Authorized physical access

24⁄7 availability

Automation

Value creation rules

The more trusted system takes control

On Authorized electronic access and Authorized physical access

Things to do, on your live data system:

- Authorized electronic access – Activate encryption, and choose an encryption mode that allows you to change passwords, or revoke encryption keys, should they be compromised.

- Authorized physical access – By all means restrict physical access to your NAS or file server. If you can’t: Neither have an encryption key stored on the NAS, nor have it read from an attached USB stick, or similar. Both would allow an attacker to just walk in, grab your valuable data plus the key, and access your data, later. If you can’t prevent this scenario, you’ll probably need an authorized person to provide a password, or to provide a key, upon every boot, to unlock your data. Depending on your network configuration, this may imply physical presence of an authorized person.

Why your live data system shouldn’t do backups – and who should

Ransomware has evolved to a state where it actively searches for backups to encrypt, manipulate, or destroy – to increase pressure on you, by making existing backups worthless.

Since your live data needs to be much more accessible than your backups, your NAS (or fileserver) is by necessity easier to attack, and hence the less trusted system, compared to the system holding your backups. We consider it an unnecessary risk to expose any details about the backup process to a less trusted system, or to even turn it into the central controller for all backups:

- Attaching backup storage devices to your live data system is an invitation to ransomware, allowing it to corrupt your backups along with your live data, right away.

- Configuring your live data system to push backups to your backup system betrays the existence of your backup system, and potentially also its type and even access options.

- The same goes for configuring your live data system to push backups to the cloud.

Therefore, we strongly recommend having the backup system, which is the more trusted system, control the backup process. The less trusted system just needs to be accessible, without knowing any details about how backups are made, stored, or secured. Strictly speaking, it shouldn’t even know that a backup system exists.

Create a separate, trusted backup system

There are several options to implement The more trusted system takes control, by setting up a system with the only purpose of creating, restoring, and periodically checking the integrity of backups.

- Some, but sadly not all, NAS systems allow a backup NAS to pull data from a live data NAS. Invest in a backup NAS, and have it pull data from the live data NAS.

- Besides your fileserver, set up and harden a backup server, and have it pull data from the fileserver. Never mount the storage devices of the backup server on the live data server.

- If you insist on keeping backups exclusively in the cloud, set up a tiny machine that gets permission to pull data from your live data system, and push it into the cloud.

Last, but not least: With all variants, a restore is strictly a push to the live data system. There is no way the live data system knows anything about the existence of backups, let alone has access to backups.

With a separate, trusted backup system, you can arrive at:

Consistency

Redundancy

Time travel

Separation

Air gapped

Offsite copy

Periodical checks

Authorized electronic access

Authorized physical access

24⁄7 availability

Automation

Value creation rules

The more trusted system takes control

A more systematic approach

Craft a backup concept

1. Start with a bit of due diligence

A systematic look at your needs will result in two living documents that you need to keep up-to-date:

- a spreadsheet that tells you what categories of data exist, what their characteristics are, and how they’re backed up;

- plus a text that documents your decisions on process and responsibilities.

Initially creating them means quite an effort, and will uncover questions you need to answer, and things you have ignored, so far. Maintaining these documents however is easy, and you’ll thank yourself a thousand times for the effort you’ve spent on them – every day you use them, and especially in case of an emergency.

Track valuable data item categories

Create a spreadsheet to record your categories of valuable data items.

Start with these columns:

- Data item category – Name it in terms of business, not of tech.

- Location – Directory / server / cloud storage provider

- Total size – In MB or GB.

- Data format – Simple files? Exported data dumps that can be re-imported?

- Backup automation possible? – Can you pull, or export, automatically?

- Verification tool – How can backed-up data items be verified, e.g. for conformance to a file type standard?

- Backup window – Can you do a hot backup (while the software is modifying data) – or do you need to shut down the software, and create cold backups, within a limited time frame?

- Config backup – Can you back up the configuration of the software that creates the items, and restore it?

- Retention period – For how long do you need to keep a backup?

When the spreadsheet looks complete, cross-check it against all software that you are using to create valuable data items, and include what you had missed. If you discover considerable backup & restore problems with any software that you depend upon: would you consider transitioning to an alternative software?

Add your restore requirements: how much, how fast, by whom?

Extend the spreadsheet by the following three columns, and use them to pin down the exact consequences of the Value creation rules principle for your data:

- Acceptable loss – How much work that went into such data items can you afford to lose, in terms of time, and be forced to re-create? This timeframe dictates backup frequency, and is commonly called Recovery Point Objective (RPO).

- Acceptable downtime – For how long can data items of this type be unavailable? This timeframe is usually called Recovery Time Objective (RTO).

- Who may restore? – Who must be enabled and authorized to restore this type of data items – or assist you with it?

Your answers will probably make you change your categorization of data items a couple of times, with spreadsheet rows being split up or merged. Whenever you tend to say »it depends«, the respective data item category probably needs a review.
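Once the spreadsheet is exported as CSV, the link between acceptable loss and backup frequency can be cross-checked mechanically. The following is only a minimal sketch under stated assumptions: the column names (`category`, `acceptable_loss_hours`, `backup_interval_hours`, `who_may_restore`), the sample rows, and the function name are hypothetical and not part of the spreadsheet described above. It flags every category whose backup interval is longer than its acceptable loss (RPO).

```python
import csv
import io

# Hypothetical CSV export of the spreadsheet; column names and values
# are illustrative assumptions only.
SAMPLE_CSV = """category,acceptable_loss_hours,backup_interval_hours,who_may_restore
Client projects,4,1,alice;bob
Accounting,24,24,alice
Photo archive,168,720,bob
"""


def rpo_violations(csv_text):
    """Return the categories whose backup interval exceeds the acceptable loss."""
    violations = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        acceptable = float(row["acceptable_loss_hours"])
        interval = float(row["backup_interval_hours"])
        # Everything created since the last backup can be lost, so the
        # interval between backups must not exceed the acceptable loss.
        if interval > acceptable:
            violations.append(row["category"])
    return violations


if __name__ == "__main__":
    for name in rpo_violations(SAMPLE_CSV):
        print(f"Backup interval too long for: {name}")
```

With the sample data, only the photo archive is flagged: it may lose a week of work at most, but is backed up only once a month.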

Establish data structures that follow your business routines

Stick with structures that support your business, and devise a scheme that makes it a no-brainer to decide where to save data, and where to look for it, when you need it again. The scheme must be easy to follow on whatever software, storage system, or hardware you are going to implement it. Also, it must support your restore requirements.

Instead of falling prey to taxomania, start with a small but reasonable system, e.g. Tiago Forte’s »P.A.R.A.« (Projects – Areas – Resources – Archives), and keep it simple.

Part of your business routines is who may see and modify what. At a minimum, figure out a reasonable user group structure (even if you’re working alone, and you’re basically your own group). If you need a finer granularity, learn how to handle Access Control Lists (ACLs).

Once you have designed a draft directory structure, pick a diverse and representative item sample set from your existing »datascape«. Try to figure out where each of them would go, and where you’d search for it. Refine the structure until you are fast enough, and satisfied. Along the way, discuss it with everybody who will need to stick to the scheme, and listen to their improvement suggestions.

Factor in privacy issues, and local privacy legislation. Your scheme should allow you to search for, and delete, personally identifiable data in which you cannot, or can no longer, prove a legitimate interest. Existing business relationships will usually justify longer retention periods for backups of related data items. Without a business relationship, legal privacy requirements can heavily interfere with your backup scheme. We recommend configuring different retention schedules that conform to the respective legal requirements.

2. Process matters

Make a few important decisions, document them, and by all means print out that document:

People

Some questions may be easy to answer:

- Who will be allowed to store data? In a home office setting, probably only a few people.

- Who will back up data? In an ideal world, it’s all automated.

- Who needs the skills to restore? In an ideal world, people who can store data also know how to restore it from a snapshot, or even a backup. In a less ideal context, you need at least two persons who are not very likely to become unavailable at the same time.

- Who will know which secrets? Per secret (encryption key, physical key, passphrase, …) you need a minimum of two persons who are not very likely to become unavailable at the same time.

Others are trickier:

- Who gets the option to restore? Ideally, it’s the person that directly works with the restored data – provided they’re knowledgeable enough to assess what exactly needs to be restored, and how it can be done.

»When the phone didn’t ring. I knew it was you.«

– attributed to Dorothy Parker

- Who will take care of notifications? Remember you’ll need both types of information: instant notification when something went wrong, but also a periodical assertion that your systems are ok. Dashboards may be fun to tinker with, and nice to look at – IF you look at them. However, a push notification channel is needed, too. Choose a proven and plain one, e.g. email.

Restoring from backups

Per spreadsheet item, decide about (and document, in additional columns):

- What is the required granularity of a restore? File? Directory? Complete database drop & restore?

- What are acceptable skill requirements, for a restore? Drag & drop, using a file manager? Mount a backup directory via FUSE? Database administration skills?

Backing up

- According to the spreadsheet you’ve created: which data gets backed up to where?

- According to the spreadsheet you’ve created: what are the acceptable costs (one-time, and recurrent) for backup and restore, roughly?

Do the math, and adapt your current backup locations accordingly, in the spreadsheet.

Periodical integrity checks (and repair)

Some integrity checks should be automated:

- Continuously check the hardware health, by setting up smartmontools

- Periodically verify the file system metadata, in read-only mode (a read-only check reports problems, but does not repair them). On a btrfs file system, that would be a

btrfs check --readonly --force

- Periodically verify and repair the disk content. On a btrfs file system, that would be a

btrfs scrub
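As a rough sketch of how such periodic checks could be scripted, the following Python helper assembles the commands and, by default, only prints them. The device path `/dev/sdX`, the mount point `/mnt/backup`, and both function names are placeholders made up for illustration. `smartctl -H` (the SMART health summary from smartmontools) and `btrfs scrub start -B` (a scrub that stays in the foreground) are our assumed concrete invocations; adapt them to your setup.

```python
import shlex
import subprocess

# Hypothetical device and mount point; replace with your own.
DEVICE = "/dev/sdX"
MOUNTPOINT = "/mnt/backup"


def integrity_commands(device, mountpoint):
    """Build the check commands as argument lists (nothing is executed here)."""
    return [
        # Overall SMART health self-assessment (smartmontools).
        ["smartctl", "-H", device],
        # Read-only metadata check; --force allows checking a mounted device.
        ["btrfs", "check", "--readonly", "--force", device],
        # Scrub in the foreground (-B), so the call returns when it is done.
        ["btrfs", "scrub", "start", "-B", mountpoint],
    ]


def run_all(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False.

    Returns the list of exit codes (empty in a dry run).
    """
    exit_codes = []
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            exit_codes.append(subprocess.run(cmd, check=False).returncode)
    return exit_codes


if __name__ == "__main__":
    run_all(integrity_commands(DEVICE, MOUNTPOINT))
```

Keeping the dry run as the default makes it possible to review the exact command lines before a scheduler runs them with root privileges, and the collected exit codes can feed whatever notification channel you choose.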

Also recommendable:

- Periodical virus scans, e.g. via ClamAV

The ransomware catch

Traditionally, File Integrity Monitoring (FIM) relied only on factors like file content checksums, file sizes, and permissions to detect tampering. With some ransomware transparently encrypting and decrypting a whole file system, you might not even notice that the bytes on the storage medium are actually encrypted now – on an infected machine, everything would look unaltered, until the extortion begins and it’s too late to do anything.

Comparing the file traits determined by the file server to what the backup server detected is inherently unreliable. Even assuming the backup server wasn’t infected, the traditional approach might not tell you whether the bytes that arrive at the backup server are still valid file content, or encrypted gibberish.

The situation can be slightly improved when you verify the types of files, at the backup server side. As long as the backup server hasn’t been compromised, that will give you (superficial) assurance that the files still conform to their alleged file types. Sadly, file type verification software mostly relies on checking a few signature bytes at known offsets in the files.
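To illustrate what »checking a few signature bytes at known offsets« means, and why the assurance is superficial, here is a minimal sketch. It is not how any particular verification tool is implemented. The signature table covers just three well-known formats, and the function names are our own.

```python
from pathlib import Path

# Well-known signatures ("magic bytes") at offset 0 for a few formats.
SIGNATURES = {
    ".pdf": b"%PDF-",
    ".png": b"\x89PNG\r\n\x1a\n",
    ".zip": b"PK\x03\x04",  # local file header of a non-empty ZIP archive
}


def matches_signature(extension, header):
    """Return True or False for known extensions, None for unknown ones."""
    expected = SIGNATURES.get(extension.lower())
    if expected is None:
        return None
    return header.startswith(expected)


def check_file(path):
    """Compare the first bytes of a file with the signature for its suffix."""
    path = Path(path)
    with path.open("rb") as handle:
        header = handle.read(16)
    return matches_signature(path.suffix, header)


if __name__ == "__main__":
    # A header that survived, followed by arbitrary bytes, still passes:
    print(matches_signature(".pdf", b"%PDF-" + b"\x00" * 11))
```

The last line shows the limitation: a file whose first bytes are intact passes the check, no matter what the rest of the file contains.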

3. Assess your concept

Review risks and threats

From the perspective of a home office, it is probably wise to assume that all risks and threats mentioned above aren’t that unlikely, and that their effect on your business would probably be devastating, unless a backup process is in place.

Walk through the list of risks and threats, and pin down the respective, specific consequences, in your context. Cross-check the spreadsheet against that, and make sure you know exactly which risks and threats aren’t mitigated, for now. Any modifications to be made?

Review trust

List everything, and everybody you trust with respect to your data and backups. Especially if hypothesizing about trust issues with them sounds a bit far-fetched to you, or potentially offensive to others:

- An external disk drive, or a little NAS

- »The cloud«, and its availability

- Your home router, or WiFi mesh

- Your hardware security token that is integrated into your backup process

- A »backup solution« you’re paying for

- The friend or family member who keeps your backup media, just in case

- People who have unrestricted access to your office space

- …

As a thought experiment, for every item on that »trust list«, figure out the specific consequences of lost trust. Determine your remaining options for restoring your data, in those cases. Make sure you know exactly which kind of trust still renders your backups vulnerable. Any modifications to be made?

What is »offsite«?

Offsite options for physical media

Storing your backups offsite means they’ll linger in an untrusted place. In addition, that place may be hard to reach in the wake of natural disasters – provided it still exists (exactly how far away is »offsite«?). 24⁄7 access may be hard to implement, but air-gapped storage is of course trivial.

There are a few caveats with HDDs: Don’t store them for more than a year without spinning them up. As a rule of thumb, store HDDs lying flat, exposed to an »office climate«: not damp; no sudden or extreme temperature changes; no risk of shaking, dropping, or tumbling over. Store with reusable desiccant packs that you replace whenever you swap the media. Remember to pad and protect the drives during transport, too.

That being said, not all offsite options are created equal. We’ll list a few popular ones here with their specific caveats, plus our recommendation.

DAS or NAS kept in a safe deposit box

You rent a safe deposit box, either from a bank or a specialized business, and periodically swap the backup media in your office with those in the deposit box.

Using a safe deposit box to harbor your backup media will give you:

Consistency

Redundancy

Time travel

Separation

Air gapped

Offsite copy

Periodical checks

Authorized electronic access

Authorized physical access

24⁄7 availability

Automation

Value creation rules

The more trusted system takes control

Things to consider:

- 24⁄7 self-service can usually be guaranteed. You won’t be constrained by »hours of business«, neither during an emergency nor during a planned backup media swap.

- Authorized physical access: Is it permitted to hand over door key codes, access cards, or physical keys to an authorized proxy?

- NAS cases, or multi-bay external disk enclosures, aren’t usually compatible with form factors of deposit boxes. You’ll probably need to deposit individual disks. A regular 3.5″ HDD in a protective case takes up about 17 cm x 14 cm x 4 cm of space. Measure the exact outer dimensions of your protective cases, inquire about the exact inner dimensions of available deposit boxes, and check which type of deposit box could accommodate your number of disks.

- Deposit lockers may be operated by robots, so secure all HDDs in the deposit box, too.

- Minimal deposit costs per year roughly amount to the price of a large HDD.

- Safe deposit boxes have become rare at banks, the existing ones are in high demand, and you may end up on a waiting list. Inquire early. Also, it may be mandatory to open a paid bank account with them, which you are never going to actually use.

- Potential privacy issues at banks: Safe deposit boxes at banks may be subject to special legislation that decreases privacy even further.
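The size check for individual disks can be estimated with a few lines of arithmetic. This is a simplified sketch, assuming that all protective cases are stacked in the same axis-aligned orientation and that no extra padding is needed. The default case size is the approximate figure quoted above. The function name and the example box dimensions are hypothetical.

```python
from itertools import permutations


def disks_per_box(box_cm, case_cm=(17.0, 14.0, 4.0)):
    """Estimate how many cases fit into a box, using a simple grid layout.

    All cases share one orientation; the best of the axis-aligned
    orientations is returned. Padding and clearance are ignored.
    """
    best = 0
    for a, b, c in set(permutations(case_cm)):
        count = int(box_cm[0] // a) * int(box_cm[1] // b) * int(box_cm[2] // c)
        best = max(best, count)
    return best


if __name__ == "__main__":
    # Hypothetical inner dimensions of a deposit box, in cm.
    print(disks_per_box((30, 20, 10)))
```

Treat the result as an upper bound for planning only: real cases need some clearance, and mixed orientations are not considered.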

DAS or NAS kept in a self storage compartment

Self storage is meant for objects that don’t fit into your basement anymore. It’s possible to find rental offers for climate-controlled, guarded spaces.

Self-storage space harboring your backup media will give you almost the same as a safe deposit box, except 24⁄7 availability:

Consistency

Redundancy

Time travel

Separation

Air gapped

Offsite copy

Periodical checks

Authorized electronic access

Authorized physical access

24⁄7 availability

Automation

Value creation rules

The more trusted system takes control

Things to consider:

- 24⁄7 access may be impossible to achieve, with access usually being unavailable at night time.

- Authorized physical access: Is it permitted to hand over door key codes, access cards, or physical keys to an authorized proxy?

- Available spaces are considerably larger than a safe deposit box, and even the smallest compartments can easily accommodate transport boxes with NAS cases, or multi-bay external disk enclosures secured inside.

- There is a high demand for the smallest self storage compartments. They may be unavailable for a prolonged period.

- Smaller self storage compartments may be operated by robots, so secure all HDDs in the transport box, and make it the storage box, too.

- Self storage centers usually require a considerable money deposit, to protect their spaces against vandalism.

- Minimal deposit costs per year roughly amount to twice the price of safe deposit boxes.

DAS or NAS kept by business partners, friends, family members

Asking business partners, friends, or family members to keep your backup devices has a profile similar to self storage, but cannot guarantee Authorized physical access:

Consistency

Redundancy

Time travel

Separation

Air gapped

Offsite copy

Periodical checks

Authorized electronic access

Authorized physical access

24⁄7 availability

Automation

Value creation rules

The more trusted system takes control

Things to consider:

- 24⁄7 access may be impossible to achieve, considering work holidays and vacations.

- Authorized physical access will most likely not be enforced.

- Handling and storage may be suboptimal, in terms of temperature, moisture, and mechanical handling.

You could grant somebody else a share of your NAS storage capacity that they can access remotely, in return for the same. As an alternative, you could host somebody else’s remote NAS in your office.

While it sounds like a great idea, there’s more trouble to be expected than just having to set up disk quotas on mutually shared NASes; making emergency arrangements for vacations; preventing unauthorized physical access; or poking a hole into your firewall, to make your, or somebody else’s, NAS available on the internet. Depending on who you partner with, even more issues keep popping up:

- Hosting provider – Do you want to invest in a mandatory rack mount? Are you willing to pay more for a colocated NAS that is your own, than for renting a dedicated root server sitting in the same center?

- Partner businesses – Will one of the businesses involved outgrow the other, and cancel the agreement? How will asymmetrical costs be split? What happens in the case of acquisitions and mergers?

- Family members – Do they have sufficient administration skills (e.g., for setting up dynamic DNS, after a home router model change)? How would you rate their security awareness? Also, does their upstream internet connection speed match what you need for your downloads, in case of an emergency?

- Friends – For how long will you be close friends? (sorry for the brutal question)

In short, we can’t really recommend this option.

Virtual offsite options

Storing your backups offsite means they’ll linger in an untrusted place. Client-side encryption is a must. Securing near-24⁄7 availability all along the path from your cloud storage provider to your local router can be challenging. Air-gapped storage can only be partially emulated, e.g. via immutability. Also, software (installation) options at the remote end may be limited, or nonexistent.

Do the math: internet connection speed @homeoffice

Consider the following minimal transfer times, via a business-grade, symmetrical 1 Gbps upstream & downstream internet connection vs. a typical, asymmetric »home« connection at 30 Mbps up & 100 Mbps down:

| | 1 Gbps up & down speed | 30 Mbps up, 100 Mbps down speed |
|---|---|---|
| Upload 1 GB | ≥ 8s | ≥ 4m 46s |
| Download 1 GB | ≥ 8s | ≥ 1m 25s |
| Upload 1 TB | ≥ 2h 23m 16s | ≥ 3d 9h 30m 10s |
| Download 1 TB | ≥ 2h 23m 16s | ≥ 1d 27m 3s |

Calculate this for your connection.
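To do that math for your own connection, here is a minimal shell sketch. It assumes decimal units (1 GB = 10^9 bytes, 1 Mbps = 10^6 bit/s) and ignores protocol overhead, so it yields lower bounds only, and its results will not match the table above to the second. The function name transfer_time is ours, chosen for illustration.

```shell
# Lower bound for a transfer time, ignoring protocol overhead.
# Assumption: decimal units, i.e. 1 GB = 10^9 bytes, 1 Mbps = 10^6 bit/s.
# Usage: transfer_time <size in GB> <speed in Mbps>
transfer_time() {
    local size_gb=$1 speed_mbps=$2 seconds
    # 1 GB equals 8000 megabits; round the resulting seconds up
    seconds=$(( (size_gb * 8000 + speed_mbps - 1) / speed_mbps ))
    printf '%dd %dh %dm %ds\n' \
        $(( seconds / 86400 )) \
        $(( seconds % 86400 / 3600 )) \
        $(( seconds % 3600 / 60 )) \
        $(( seconds % 60 ))
}

transfer_time 1000 30    # upload 1 TB at 30 Mbps
transfer_time 1000 100   # download 1 TB at 100 Mbps
```

Replace the two numbers with the size of your data, and with the speeds you actually measure rather than the ones advertised by your ISP.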

Home office workers might find their upload times prohibitive, and download times barely in line with their restore requirements. Unstable internet connections, or forced nightly disconnects by their ISP might require solutions that can resume after a disconnect, without starting all over.

While incremental backups might be feasible within a tolerable time frame, both the initial full backup and a full restore of maybe several TB of data will require patience and time that you might not be able to afford.

Cloud storage

With respect to cloud storage, there is a multitude of caveats to consider, before (literally) buying in:

- Beware of the costs for both a mere download (egress), and the long-term storage of large volumes of data. Providers love to specify seemingly »insignificant« costs by the GB (like, 0.023 US$ per GB and month; plus 0.09 US$ egress fees, i.e. download fees per GB). These costs will add up: for 10 TB of data, in our example, the bill is 230 US$ per month for storage, and 900 US$ for every download, when you need a full restore. You may find yourself pressed to reduce the amount of data that gets backed up, and hence compromise beyond what’s reasonable.

- Privacy comes at an extra cost. Both storage and egress fees are higher when you want to, or even need to have your backups hosted in countries where privacy legislation is strong.

- 24⁄7 availability may be at risk, and depend on availability levels guaranteed by the cloud storage provider; as well as on the availability of high-speed internet access at restore time, of course, which may be a problem when natural disasters kill off the infrastructure in your region. Finally, consider law enforcement by the authorities of the country your storage provider operates from: you may be locked out of your backups.

- Remember that many popular cloud storage options don’t support the syncing of file system level snapshots (btrbk-style, or ZFS-style) from a NAS. On the other hand, relying on specialized providers offering just that could easily get you locked in. Avoiding that, you may end up having Time travel options only on your local NAS – unless you fall back to a file-system agnostic approach for syncing snapshots to the cloud.

- Most large-scale cloud storage providers require you to use their proprietary APIs for access. That means you need to set up your backup solution to use that API – not just for backups, but also when you’re under stress, need a restore, and maybe lack most of your infrastructure, due to an emergency. Smaller providers may offer a wider choice of access options (including SSH into a plain file system directory), at a better price, but not mirror your data to anywhere else in their data center.

- Support may only be available in the English language.
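The storage and egress figures from the first caveat above are easy to re-run with your own data volume and your provider’s price list. A minimal sketch, assuming decimal units (1 TB = 1000 GB) and prices entered in thousandths of a US$ per GB, to stay within shell integer arithmetic; the function name cloud_cost is ours.

```shell
# Rough cloud storage cost estimate.
# Assumptions: 1 TB = 1000 GB; price is given in 1/1000 US$ per GB.
# Usage: cloud_cost <size in TB> <price per GB, in 1/1000 US$>
cloud_cost() {
    local size_tb=$1 price_milli=$2 total_milli
    total_milli=$(( size_tb * 1000 * price_milli ))
    printf '%d.%02d USD\n' $(( total_milli / 1000 )) $(( total_milli % 1000 / 10 ))
}

cloud_cost 10 23   # monthly storage for 10 TB at 0.023 US$ per GB
cloud_cost 10 90   # one full download of 10 TB at 0.09 US$ per GB
```

Real price lists often add tiers, request fees and minimum storage durations, which this sketch ignores.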

Here are a few precautions to take, and potential improvements to make:

- Separation and Offsite copy – Schedule a periodical backup to cloud storage.

- Authorized electronic access – Always enable client-side encryption when pushing data to cloud storage.

Virtual Private Servers (VPS)

Since affordable disk storage options offer around 100–300 GB only, and storage beyond that becomes prohibitively expensive, renting a VPS isn’t a reasonable option for offsite backups.

Dedicated root servers

A physical server nobody else has access to, where you can install both the operating system and the software of your choice, while enjoying a fixed IP, and most likely the safety net of a (software) RAID. Both unlimited traffic and free replacement of failing disks are a must – read the fine print, please.

- Costs may be acceptable, and can be reduced. Besides their standard machines, some hosting providers are offering »refurbished« server models that would usually be decommissioned. Make sure the deal includes at least 2 HDDs for a RAID1 configuration, and includes both replacement for failing disks, and unlimited traffic.

- You may need to monitor the RAID health on your own, and depend on the service of the provider to exchange failing disks.

- Support may only be available in the English language.

A basic example using two Linux servers, FLOSS-only

What it takes: You’re a reasonably experienced Linux user who can install the Linux distribution of your choice, plus a few extra software packages on a machine. You’ve already worked with a text editor on a terminal.

We suggest you experiment with the approach first (see hardware requirements), instead of diving straight into production use. In production use, you’ll probably want to add more btrfs subvolumes that match your business structure (see above), to accommodate for diverse backup needs.

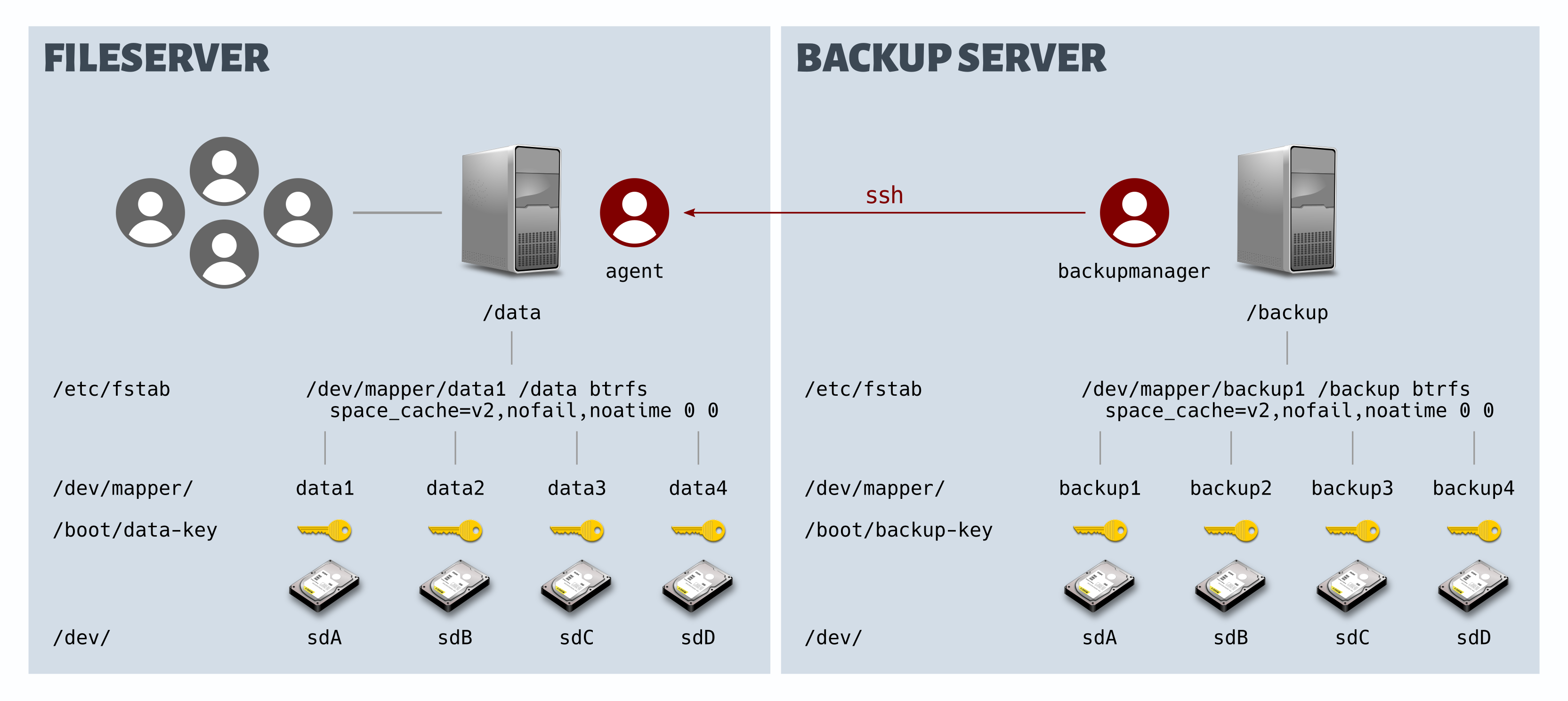

Here’s how you can implement a sound backup scheme, based on two machines running FLOSS under Linux:

- A »fileserver«, possibly with a variety of features besides serving files to your devices, and most likely reachable from the internet. Hence the lesser trusted system.

- A »backup server«, used exclusively for this purpose. Hence, the more trusted system.

Advantages:

- No proprietary NAS hardware or software involved.

- No hardware RAID support required.

- Storage media, including the current state of your live data or backups, can be moved over to a new / replacement computer, and will simply continue to work.

- You can add more features to the fileserver using its regular package manager, or install Docker containers.

Disadvantages:

- Resulting system may be bulkier than a NAS.

- One-time setup, and maintenance, requires manual work that isn’t supported by a GUI.

- Power consumption of recycled old server hardware may be higher than for a NAS.

- Additional features can’t be conveniently pulled from a proprietary app store.

The steps we’ll take:

- Required hardware and software

- Set up a snapshot-enabled, encrypted /data volume on a fileserver; plus, a /backup volume with identical characteristics on a separate backup server.

- Configure periodic snapshots & pull-backups

- Configure backup integrity checks

- How to restore files

1. Required hardware and software

For experimenting and getting familiar with the approach

Fileserver and backup server: Repurpose two old computers, if you wish. Install a Linux distribution that you are familiar with, on both. We recommend a minimal server distribution, e.g. Ubuntu Server.

If not installed by default, add these on both machines:

- OpenSSH Server

- btrfs-progs

Finally, make sure you can log in via ssh to both machines.

In addition, you’ll need one spare disk (internal or external) per machine, for the /data and /backup volumes, respectively. One old USB drive per machine will do, you can even use USB sticks.

For production use

Fileserver: Ideally, you can repurpose an existing computer with decent performance characteristics, but low power consumption. Ensure that 16 GB of RAM or more are installed; that Gigabit Ethernet, at a minimum, is available; and that there is an internal 4x or 8x disk cage, for optimal speed.

If the computer lacks an internal disk cage, an external 4‑bay or 8‑bay disk enclosure might be an option, although speed may suffer. If that’s not a problem, you might even give a try to a mini PC that you had already taken out of service – provided it has at least a USB 3.0 port.

Backup server: For the backup server, you could set up hardware that is identical to the file server configuration. However, especially after a first full backup, there will be mostly incremental backups only, which means that considerably less powerful hardware is usually sufficient. E.g., it’s feasible to pick a Raspberry Pi 4, or similar (as long as it sports a USB 3.0 interface, as a minimum).

2. Create snapshot-enabled, encrypted volumes using btrfs and LUKS

The btrfs file system is widely available under an undisputed open source license. It natively supports snapshots, but not encryption. dm-crypt and LUKS can help with that. Here’s how to set up a btrfs file system that sits on top of multiple encrypted devices, and gets unlocked and mounted at boot time.

We’ll show an example for creating the /data volume on a fileserver. The /backup volume on a separate backup server is created in the same way, just substitute »backup« for »data«.

In our example, we’ll use 4 devices /dev/sdA … /dev/sdD, and create a btrfs file system that will be mounted as /data, at boot time.

The device names used here, such as /dev/sdA, are deliberately unusual, to help you avoid copy & paste disasters. Always make sure you are really (!) working with the devices intended for this.

Generate a random encryption key

The length of the key file will be 4096 bytes – a good default, but other lengths would work as well. Only root shall have access to it.

    dd bs=1024 count=4 if=/dev/random of=/boot/data-key iflag=fullblock
    chown root:root /boot/data-key
    chmod 600 /boot/data-key

Result: /boot/data-key

The resulting key file will be used to unlock the drives, and can be stored anywhere where it’s accessible during boot time. If you lose it, you lose access to your files, forever. So make at least two backup copies of the key file, on separate media that you periodically check for integrity, and lock the backups away in a place accessible to authorized persons only.
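Before relying on a key file, and whenever you check one of its backup copies, a quick sanity check doesn’t hurt. The following is a sketch only: the function name check_keyfile is ours, it relies on GNU stat, and it checks size and permission bits but not ownership. The demonstration runs on a throwaway file, filled from /dev/urandom, instead of touching the real key.

```shell
# Sanity check for a key file: expected size, and no access for group/others.
# Usage: check_keyfile <path> [expected size in bytes]
check_keyfile() {
    local file=$1 expected=${2:-4096} size mode
    size=$(stat -c %s "$file") || return 1
    mode=$(stat -c %a "$file") || return 1
    if [ "$size" -ne "$expected" ]; then
        echo "unexpected size: $size bytes" >&2
        return 1
    fi
    if [ "$mode" != "600" ] && [ "$mode" != "400" ]; then
        echo "permissions too open: $mode" >&2
        return 1
    fi
    echo "ok: $file"
}

# Demonstration on a throwaway file, not on the real key
demo=$(mktemp)
dd bs=1024 count=4 if=/dev/urandom of="$demo" iflag=fullblock status=none
chmod 600 "$demo"
check_keyfile "$demo"
```

For the real key you would run check_keyfile /boot/data-key as root.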

Encrypt all devices

For each of the 4 devices /dev/sdA … /dev/sdD, overwrite them once and completely, with random data. This will take a while, so wipe all four devices in parallel. Example for the first one:

    shred -vn 1 /dev/sdA

After completion, encrypt each of the devices with the key file, as shown here in the example for /dev/sdA:

    cryptsetup -v luksFormat /dev/sdA /boot/data-key

In case you can’t enforce only authorized physical access to your office space (or fear raids, confiscation, burglary), you can skip key file creation, and omit the key file name from the above command. As a consequence, you’ll be asked for an encryption passphrase (use the same for all devices). Later, on every boot, you’ll need to type the passphrase in, for every encrypted device (see below); obviously, this implies that you need a keyboard attached to the server, and possibly a tiny monitor.

In theory, you might store an encryption key on a USB stick (there are configuration options for this). However, it’s only a matter of time until you will forget to pull it out after all devices have been unlocked, during boot. Even if you remember to do that, you’ll probably not take the time to immediately hide the stick at an offsite location – which leaves you without defense in case of a search warrant, or a body search.

Create the btrfs file system

Once all 4 devices are encrypted, you can unlock them, and have them mapped, as shown here:

    cryptsetup luksOpen --key-file=/boot/data-key --type luks /dev/sdA data1
    cryptsetup luksOpen --key-file=/boot/data-key --type luks /dev/sdB data2
    cryptsetup luksOpen --key-file=/boot/data-key --type luks /dev/sdC data3
    cryptsetup luksOpen --key-file=/boot/data-key --type luks /dev/sdD data4

Result: /dev/mapper/data1 … /dev/mapper/data4

Formatting to btrfs implies choosing the amount and type of redundancy you want for data (-d) and metadata (-m). Those decisions can be changed later, to an extent not possible with other software or hardware solutions.

We’ll give two example setups here, and expressly discourage you from using the second one for production purposes:

Example A, for production use – Create a file system that resembles a traditional RAID1 setup across all devices, hence will protect you against failure of one of the physical drives. 50% of the combined device capacities will be available for your data.

You can even set up btrfs to use a raid1 profile across multiple devices of different types and capacities, as long as the capacity of the largest device does not exceed the capacity of all others combined. If it does, the excess capacity will go unused.

    mkfs.btrfs -m raid1 -d raid1 /dev/mapper/data1 /dev/mapper/data2 /dev/mapper/data3 /dev/mapper/data4
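The capacity rule for raid1 across unequal devices can be sketched as a small calculation: every block is stored twice, on two different devices, so the usable space is half the total, unless the largest device is bigger than all others combined. The function name raid1_usable is ours, and the result is an estimate that ignores metadata and allocation granularity.

```shell
# Estimate the usable capacity of a btrfs raid1 profile across devices of
# different sizes (two copies of every block, on two different devices).
# Usage: raid1_usable <size> [<size> ...]   (all sizes in the same unit, e.g. GB)
raid1_usable() {
    local total=0 largest=0 size others
    for size in "$@"; do
        total=$(( total + size ))
        if [ "$size" -gt "$largest" ]; then largest=$size; fi
    done
    others=$(( total - largest ))
    if [ "$largest" -gt "$others" ]; then
        # The largest device can only mirror what fits on all others combined
        echo "$others"
    else
        echo $(( total / 2 ))
    fi
}

raid1_usable 4000 4000 4000 4000   # four equal drives
raid1_usable 8000 2000 1000        # largest drive exceeds the others combined
```

In the second call, 5000 GB of the largest drive would go unused, which is the situation described above.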

Example B, for testing and learning purposes – Create a file system that resembles a traditional RAID1 with respect to metadata, but keeps only 1 copy of the real data, to give you as much disk space as possible, e.g. for experiments using a »leftover« mix of wildly differing physical drives.

Obviously, this approach results in tremendous risks: a single broken device results in complete data loss. The more devices you have, the greater that risk. With large amounts of data to be restored, you’ll also face a considerable downtime of likely days while you replace the broken device, re-create the btrfs file system from scratch, and restore your data from a backup. Which, hopefully, didn’t die at the same time. In short: don’t use this, in production.

    mkfs.btrfs -m raid1 -d single /dev/mapper/data1 /dev/mapper/data2 /dev/mapper/data3 /dev/mapper/data4

If you are experimenting, and using a single USB drive only, substitute dup for raid1.

Some hints:

- btrfs profiles other than raid1 and single are summarized in the wiki.

- Using raid5 or raid6 is currently discouraged.

- Play with the btrfs usage calculator to preview the results of your formatting decision.

Mount the file system once

    mkdir -p /mnt/data
    mount -t btrfs -o noatime /dev/mapper/data1 /mnt/data
    cd /mnt/data

Create your desired subvolumes, e.g.:

    btrfs subvolume create @

Have it unlocked and mounted, during boot

Have the encrypted devices unlocked

Look up the UUIDs of the encrypted devices:

    blkid --match-token TYPE=crypto_LUKS

This should yield a list of UUIDs, including those of the encrypted devices, similar to:

    /dev/sdA: UUID="53c98e6d-4659-4ac0-98b2-f6027802ee51" TYPE="crypto_LUKS"
    /dev/sdB: UUID="c93f82b0-6b51-4615-b0b2-15e0300ca614" TYPE="crypto_LUKS"
    /dev/sdC: UUID="9113704c-dac6-40b6-a4fc-fd4364728517" TYPE="crypto_LUKS"
    /dev/sdD: UUID="911dc82a-3016-4cba-b4ca-b5b7c8ffce0b" TYPE="crypto_LUKS"

Add the devices to /etc/crypttab, to have them unlocked during boot time using the secret key file, and mapped automatically:

    data1 UUID=53c98e6d-4659-4ac0-98b2-f6027802ee51 /boot/data-key luks,nofail
    data2 UUID=c93f82b0-6b51-4615-b0b2-15e0300ca614 /boot/data-key luks,nofail
    data3 UUID=9113704c-dac6-40b6-a4fc-fd4364728517 /boot/data-key luks,nofail
    data4 UUID=911dc82a-3016-4cba-b4ca-b5b7c8ffce0b /boot/data-key luks,nofail

In case you can’t enforce only authorized physical access to your office space (or fear raids, confiscation, burglary), you can encrypt the devices via a passphrase (see Encrypt all devices, above), and replace the key file name by the word »none«, here. You’ll be asked for the passphrase on every boot, for every encrypted device.
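Typing UUIDs by hand invites typos. As a sketch, the following function turns blkid output into crypttab lines in the format used above. The function name crypttab_lines is ours, and it assumes blkid output in the format shown in the sample listing. Review the result before appending it to /etc/crypttab: blkid also lists LUKS devices that are not part of this volume, such as an encrypted system disk.

```shell
# Turn blkid output (read from stdin) into /etc/crypttab lines.
# Usage: blkid --match-token TYPE=crypto_LUKS | crypttab_lines <prefix> <key file>
crypttab_lines() {
    local prefix=$1 keyfile=$2 n=0 line uuid
    while IFS= read -r line; do
        # Extract the value of the UUID="..." field (PARTUUID does not match)
        uuid=$(printf '%s\n' "$line" | sed -n 's/.* UUID="\([^"]*\)".*/\1/p')
        [ -n "$uuid" ] || continue
        n=$(( n + 1 ))
        printf '%s%d UUID=%s %s luks,nofail\n' "$prefix" "$n" "$uuid" "$keyfile"
    done
}

# Demonstration with two lines taken from the sample output
crypttab_lines data /boot/data-key <<'EOF'
/dev/sdA: UUID="53c98e6d-4659-4ac0-98b2-f6027802ee51" TYPE="crypto_LUKS"
/dev/sdB: UUID="c93f82b0-6b51-4615-b0b2-15e0300ca614" TYPE="crypto_LUKS"
EOF
```

The mapping names are numbered in the order in which blkid reports the devices, which is why a manual review is still needed.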

Finally, have the file system mounted

It is sufficient to specify only a single one of the four mapped devices, in /etc/fstab. Some hints with respect to mount options:

space_cache=v2 increases performance for huge data or backup volumes, at the cost of more metadata held in memory.

nofail is recommended since, unlike a common RAID, a btrfs file system will not let itself be mounted in degraded state (missing or broken HDD) unless you expressly add the degraded option, for repair. In other words, without nofail, the machine wouldn’t boot after a drive has failed.

    /dev/mapper/data1 /data btrfs space_cache=v2,nofail,noatime 0 0

Instead of the default subvolume, you can also mount a specific subvolume that you have already created, by adding the subvol option along with a relative path:

    /dev/mapper/data1 /data btrfs subvol=@,space_cache=v2,noatime 0 0

3. Configure periodic snapshots & backups

For increased security, we’ll implement a strict separation of concerns:

- The fileserver will periodically create and delete local snapshots of /data, according to its own, separate configuration. No other device has write access to these snapshots. The local snapshots created can be made available, read-only, to users; this will give them a limited time traveling option on the fileserver.

- The backup server will periodically log in to the fileserver, and pull copies of the existing snapshots to its volume /backup. It will have a separate retention policy configured, and delete only its local copies of snapshots.

- We’ll create a user called agent on the fileserver that has a strict read-only access to /data. We’ll create a user backupmanager on the backup server to use a password-less ssh login to the account agent on the fileserver, for pulling snapshot copies for a backup.

Preparing users backupmanager and agent

On the backup server, create user backupmanager, and allow them to use sudo:

    sudo useradd -d /home/backupmanager -m -s/bin/bash backupmanager
    sudo usermod -a -G sudo backupmanager

Result: /home/backupmanager

Then create an ssh key pair for backupmanager (when prompted for a password, leave it empty and just press Enter):

    sudo su backupmanager
    ssh-keygen

You should see output similar to:

    Generating public/private rsa key pair.
    Enter file in which to save the key (/home/backupmanager/.ssh/id_rsa):
    Created directory '/home/backupmanager/.ssh'.
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /home/backupmanager/.ssh/id_rsa
    Your public key has been saved in /home/backupmanager/.ssh/id_rsa.pub

Copy the public key /home/backupmanager/.ssh/id_rsa.pub to a USB stick and rename it to authorized_keys, there. Open it with your favorite text editor and remove the trailing backupmanager@<backupserver>, for a bit of camouflage. Make sure you don’t inadvertently introduce any line breaks, while editing.

On the fileserver, create user agent, and allow them to use sudo; also, add an empty subdir .ssh to their home directory:

    sudo useradd -d /home/agent -m -s/bin/bash agent
    sudo usermod -a -G sudo agent
    sudo -u agent mkdir /home/agent/.ssh
    sudo -u agent chmod 700 /home/agent/.ssh

Result: /home/agent/.ssh

Note how we have neither set a password nor created an ssh keypair here, because we’ll establish the public ssh key of the backup server’s backupmanager as the only key that is authorized for a login to the account of agent, on the fileserver:

Plug in the USB stick to the fileserver, and copy authorized_keys to /home/agent/.ssh:

Result: /home/agent/.ssh/authorized_keys

Ensure tight access restrictions:

    sudo chown agent:agent /home/agent/.ssh/authorized_keys
    sudo chmod 600 /home/agent/.ssh/authorized_keys

Now verify on the backup server that backupmanager has a working, password-less login to the account agent on the fileserver (substitute the name or IP of your fileserver for <fileserver>):

    sudo su backupmanager
    ssh agent@<fileserver>

Returning to the fileserver, we’ll allow agent to execute just the commands required for pulling a backup, without a sudo password being requested. All of these commands are effectively read-only, and we’ll further restrict their file access to /data. On the fileserver, create /etc/sudoers.d/agent with this content:

    Cmnd_Alias USAGE=/usr/bin/btrfs filesystem usage * /data
    Cmnd_Alias SEND=/usr/bin/btrfs send /data/*
    Cmnd_Alias SENDP=/usr/bin/btrfs send -p /data/* /data/*
    Cmnd_Alias SVOLLIST=/usr/bin/btrfs subvolume list * /data
    Cmnd_Alias SVOLSHOW=/usr/bin/btrfs subvolume show * /data
    Cmnd_Alias READLINK=/usr/bin/readlink
    Cmnd_Alias TEST=/usr/bin/test
    agent ALL=NOPASSWD:USAGE,SEND,SENDP,SVOLLIST,SVOLSHOW,READLINK,TEST

Make sure access to it is restricted:

    chmod 640 /etc/sudoers.d/agent
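A syntax error in a sudoers file can lock you out of sudo, so it is worth checking a new file before relying on it. The sketch below wraps visudo in check-only mode (-c) for a given file (-f); the function name check_sudoers is ours.

```shell
# Check the syntax of a sudoers file with visudo, without editing anything.
# Usage: check_sudoers <file>
check_sudoers() {
    local file=$1
    if ! command -v visudo >/dev/null 2>&1; then
        echo "visudo not found" >&2
        return 2
    fi
    # -c: check-only mode, -f: check this file instead of the default sudoers
    visudo -c -f "$file"
}

# Example call, to be run as root on the fileserver:
# check_sudoers /etc/sudoers.d/agent
```

A non-zero exit status means the file should be fixed before you log out of your current root session.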

Periodically creating snapshots on the fileserver

Enter btrbk, a single script that provides high-level snapshot and backup capabilities for btrfs file systems. If you have used rsnapshot before, many of the btrbk concepts will look familiar to you.

For the sake of testing, we’ll create a subvolume for photographs in /data and a subdirectory that will hold all snapshots of it:

    cd /data
    sudo btrfs subvolume create photos
    sudo mkdir /data/btrbk_snapshots

Result: /data/photos, /data/btrbk_snapshots

Now please copy a few images to /data/photos, to play with.

On the fileserver, using your favorite editor, create this btrbk configuration in /etc/btrbk-snapshot.conf:

    # Local time machine - Create snapshots only
    timestamp_format long-iso

    # Local snapshot retention
    snapshot_preserve_min 3d   # Keep all snapshots for 3 days
    snapshot_preserve 31d      # Keep one snapshot each for the past 31 days

    # Subvolumes to create snapshots from, in snapshot_dir
    volume /data
      subvolume photos
        snapshot_dir btrbk_snapshots/photos

While it is possible to just dump all snapshots of all subvolumes into /data/btrbk_snapshots, the result will quickly look like an unstructured mess, especially when you’re making /data/btrbk_snapshots available to users, for their time travel needs. For easier navigation, we recommend to create a separate snapshot subdirectory for each subvolume, as shown for /data/btrbk_snapshots/photos here.

Since btrbk does not create snapshot directories on demand, we’ll have to do that:

    sudo mkdir -p /data/btrbk_snapshots/photos

Now verify everything works as expected by executing a »dry run« (no actual changes being written):

    sudo /usr/sbin/btrbk -n -v -c /etc/btrbk-snapshot.conf snapshot

If the output looks as expected, run the real command:

    sudo /usr/sbin/btrbk -q -c /etc/btrbk-snapshot.conf run

When it has finished, a timestamped snapshot should have been created under /data/btrbk_snapshots/photos.

You can now automate taking snapshots

While it may be tempting to just add a simple crontab entry for user root, for taking snapshots, it is considered (very) bad practice to use root this way.

Rather, create another user snapshotter on the file server, and allow it to use sudo:

    sudo useradd -d /home/snapshotter -m -s/bin/bash snapshotter
    sudo usermod -a -G sudo snapshotter

Result: /home/snapshotter

Also, we’ll allow snapshotter to execute the snapshotting command without a sudo password being requested. Create /etc/sudoers.d/snapshotter with this content:

    Cmnd_Alias SNAPSHOT=/usr/sbin/btrbk -q -c /etc/btrbk-snapshot.conf run
    snapshotter ALL=NOPASSWD:SNAPSHOT

You could now add e.g. this to the system-wide crontab (/etc/crontab, which takes a user name as its sixth field) of the fileserver, to create a snapshot every 15 minutes, and to discard old snapshots, after their configured retention period:

    */15 * * * * snapshotter sudo /usr/sbin/btrbk -q -c /etc/btrbk-snapshot.conf run

Periodically pulling backups

On the backup server, using your favorite editor, create this btrbk configuration in /etc/btrbk-pull-backup.conf. Substitute the IP (recommended) or the name of your fileserver for <fileserver>:

    timestamp_format long-iso

    # Use own credentials to log in as user 'agent', on fileserver
    ssh_identity /home/backupmanager/.ssh/id_rsa
    ssh_user agent

    # Make 'agent' run their commands via sudo
    backend_remote btrfs-progs-sudo

    # Backup retention: E.g., keep one snapshot for each of
    # the past 24 hours, 31 days, 4 weeks, 12 months, and 10 years
    target_preserve_min no
    target_preserve 24h 31d 4w 12m 10y

    # Pull snapshot copies of the given subvolume from snapshot_dir
    # on fileserver, and copy them to target on backup server
    volume ssh://<fileserver>/data
      subvolume photos
        snapshot_dir btrbk_snapshots/photos
        snapshot_preserve_min all
        snapshot_create no
        target send-receive /backup/<fileserver>/photos

We need to create the target directory for the pulled snapshots, too:

    sudo mkdir -p /backup/<fileserver>/photos

Now verify everything works as expected by executing a »dry run« (no actual changes being made). Log in to the backup server, and then:

    sudo btrbk -n -v -c /etc/btrbk-pull-backup.conf resume

If the output looks as expected, run the real command:

    sudo btrbk -q -c /etc/btrbk-pull-backup.conf resume

When it has finished, a timestamped snapshot should have been pulled from the fileserver, to /backup/<fileserver>/photos. Repeated execution will not always result in a pull, because the retention policy for backups (24h) says we just keep one pulled snapshot for each of the past 24 hours, on the backup server. Hence, the next pull will only occur an hour after the last one.

You can now automate pulling snapshots for backups

Since we already have user backupmanager on the backup server, we just need to allow them to execute the pull command without a sudo password being requested.

Create /etc/sudoers.d/backupmanager with this content:

    Cmnd_Alias PULL=/usr/sbin/btrbk -q -c /etc/btrbk-pull-backup.conf resume
    backupmanager ALL=NOPASSWD:PULL

You could now add e.g. this to the system-wide crontab (/etc/crontab, which takes a user name as its sixth field) of the backup server, to pull snapshots at 30 minutes past every hour, and to discard old snapshots, after their configured retention period:

    30 */1 * * * backupmanager sudo /usr/sbin/btrbk -q -c /etc/btrbk-pull-backup.conf resume

What if you want scripts for backup and snapshotting, not just commands?

No problem, just remember to adjust the files you had created under /etc/sudoers.d.

Adding an offsite backup

Direct Attached Storage (DAS)

Since we consider the backup server to be our most trusted system, we trust it with backing up its own snapshots to a DAS, which then will be moved offsite.

You can find a sample script for that under backup2offsite.sh.

Cloud storage

Since we do not trust the remote server, options for sending btrfs snapshots to the cloud, while employing client-side encryption, are limited. Obviously, we can’t trust the cloud with holding a decryption key.

If you really want to send btrfs snapshots to the server, you could e.g. set up a LUKS-encrypted container file (of possibly several TB) on the remote server, and give the backup server SSH access to it. The backup server can then open the container using its local encryption key, put a btrfs file system on top, and mount the file system to a local mount point. In theory, btrbk can then be used on the backup server »as usual«.

However, the initial, full backup will most likely take days – and btrfs on the backup server cannot seamlessly resume sending a snapshot, after e.g. internet connection problems broke the upload. You could, however, create and mount the container file locally on the backup server. Have btrbk fill it with the initial, full backup, and then use the more resilient rsync to upload the container file to your cloud storage. Once uploaded, the backup server can open and mount it, and keep sending incremental snapshots.
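For the rsync upload of the container file, a small retry wrapper can ride out disconnects. This is a sketch under assumptions: the function name retry is ours, and the file name, user and host in the usage comment are placeholders. rsync’s --partial option keeps a partially transferred file, so that a later attempt can build on what has already arrived.

```shell
# Retry a command until it succeeds, or until the attempts are used up.
# Usage: retry <max attempts> <seconds between attempts> <command> [args ...]
retry() {
    local max=$1 pause=$2 attempt=1
    shift 2
    until "$@"; do
        if [ "$attempt" -ge "$max" ]; then
            echo "giving up after $attempt attempts" >&2
            return 1
        fi
        attempt=$(( attempt + 1 ))
        sleep "$pause"
    done
}

# Hypothetical usage; container file, user and host are placeholders:
# retry 50 60 rsync --partial --inplace /backup/container.img user@cloudhost:container.img
```

Choose the pause so that it comfortably covers the forced nightly disconnect of your ISP, if you have one.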

If you want to follow a more pragmatic and resilient approach that still distrusts the cloud, just consider cloud storage a plain, untrusted file storage, and use backup software like BorgBackup (without server-side installation), restic or Kopia that can resume interrupted uploads.

4. Integrity & restore checks

Monitoring the backup server

While it may be tempting to have a beautiful web dashboard on your backup server, this would also needlessly increase the attack surface. Since we recommend to access the backup server via passwordless SSH login only, we suggest a terminal dashboard. We recommend sampler – have a look at basic dashboard configurations for a backup server, as well as for a file server.

Apart from dashboards, we recommend to configure a monitoring solution in »headless« mode that will alert you via email. We recommend Monit – have a look at a basic configuration file for monitoring a server.

File type verification

On the backup server, there is a (somewhat limited) option to verify the file types inside a snapshot, to check whether file contents are encrypted gibberish produced by ransomware on the file server.

We’ll use siegfried, a tool that was developed mainly for archivists and can be made to identify file types by searching for signature bytes inside files, instead of just looking at file extensions. However, reading just a few bytes only goes so far in thwarting the actions of ransomware.

Here’s a manual workflow:

After installation of siegfried, periodically update file signatures from various reputable sources, and create a combined signature from them:

    sf -update deluxe
    roy build -noname -multi positive combined.sig
    roy add -noname -multi positive -name fddstrong -loc combined.sig
    roy add -noname -multi positive -name freedesktopstrong -mi freedesktop.org.xml combined.sig
    roy add -noname -multi positive -name tikastrong -mi tika-mimetypes.xml combined.sig

This will include four different »identifiers«, and request they actually look at file content, on each scan.

This database can now be used to scan a snapshot subvolume inside the backup volume, and create a CSV-formatted report from the scan results. Before the full command line, here’s a short explanation of what some parameters and commands do:

- -utc emits all timestamps normalized to UTC

- -log p,t prints all filenames, as a progress indicator, and shows the time needed

- -csv emits the report as a comma-separated values (CSV) file

- We pipe the report through iconv to drop all non-UTF‑8 characters (these may occur when processing a very old file system with file name encoding errors).

The full command line:

    sf -utc -log p,t -csv -sig combined.sig /backup/fileserver/photos/.20211231T160501+0100 | iconv -f utf-8 -t utf-8 -c -o /tmp/report.csv -

Result: /tmp/report.csv

The resulting CSV file can now be evaluated using a tool of your choice. We’ll use csvkit here.

To print a list of files that could not be recognized by any of the identifiers:

    csvsql -v --no-constraints --tables siegfried --query "SELECT filename FROM siegfried WHERE (id='UNKNOWN' AND id_2='UNKNOWN' AND id_3='UNKNOWN' AND id_4='UNKNOWN')" /tmp/report.csv

To calculate the percentage of such files:

    csvsql -v --no-constraints --tables siegfried --query "SELECT ROUND(((SELECT COUNT(*) FROM siegfried WHERE (id='UNKNOWN' AND id_2='UNKNOWN' AND id_3='UNKNOWN' AND id_4='UNKNOWN')) * 100.0) / (SELECT COUNT(*) FROM siegfried),2)" /tmp/report.csv

Manual restore checks (are trainings)

Based on your backup concept spreadsheet, establish a schedule and a routine for periodically and randomly picking representative items from each category, for a restore test.

Add two more columns to your spreadsheet, and maintain them:

- last restore check (date)

- last restored item (full name)

A restore is not complete unless you can actually continue working with restored data. If it’s just a single-file drag & drop operation, followed by opening that file from an application, fine. If it’s drag & drop of a dumped database, followed by a re-import of the dump, and an inspection of the resulting database, the whole path must be walked.

Restore checks are the training ground for everybody authorized to restore data. Just »having shown« an authorized person once »how it works, in principle« is begging for trouble. Without routine in restoring backed up data, emergencies are not just way more stressful, but also likely to escalate further, up to the point of permanently losing irreplaceable data. Help others learn how to recover the various categories of backed up data, but insist on everybody taking turns, according to schedule.
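For the »randomly picking« part of a restore check, a few lines of shell can help. This is a sketch: the function names pick_random_file and same_content are ours, the paths in the usage comment are placeholders, and it relies on GNU find and shuf. It only confirms that a restored copy is bit-identical, which is the first step of a restore check, not the whole path described above.

```shell
# Pick one random file below a directory (e.g. a snapshot), for a restore test.
# Usage: pick_random_file <directory>
pick_random_file() {
    find "$1" -type f -print0 | shuf -z -n 1 | tr -d '\0'
}

# Succeed if two files have identical content.
# Usage: same_content <original> <restored copy>
same_content() {
    cmp -s "$1" "$2"
}

# Hypothetical usage; the paths are placeholders:
# item=$(pick_random_file /backup/<fileserver>/photos/<snapshot>)
# cp "$item" /tmp/restore-test/
# same_content "$item" "/tmp/restore-test/$(basename "$item")" && echo "restore ok: $item"
```

Note the picked item and the date in the two spreadsheet columns, then open the restored file in the application that is meant to work with it.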

5. How to restore data

Try to satisfy most of your restore needs by making the snapshot directories on the file server available to users, read-only.

Restoring from the backup system should be mostly restricted to restore checks, and recovery. Deliberately make frequent restores from the backup server less convenient.

Most SSH tutorials will advise you to disable passphrase-based logins, in favor of authorized keys. In general, this is good advice, especially in times where many people still won’t use password managers for generating safe passphrases.

With respect to the restore user account on the backup server, we advise you to make an exception. Any authorized key file that malicious software can get hold of is a severe risk for your backups. Therefore, create an account on the backup server that is for restore purposes only. Secure it with a safe, password-manager generated passphrase, and share the passphrase with another trusted person who is not very likely to become unavailable at the same time as you.

Licensing:

This content is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

For your attributions to us please use the word »tuxwise«, and the link https://tuxwise.net.